Web scraping is a method of extracting data from websites automatically, using bots and tools. Companies around the world often adopt it to integrate real-time data into their business models for sustained growth. Ethical web scraping means extracting that data while respecting site rules, user privacy, and the law.

Sources state that over 72% of mid- to enterprise-level firms have adopted web scraping for competitive monitoring. Additionally, 60% of marketing teams use scraping to support personalization. Another report values the global web scraping market at $501 million in 2025 and expects it to surpass $2,030 million by 2035. Such growth indicates rapid adoption and usage.

However, unethical web scraping can lead to blocking, fines, and lawsuits for data privacy violations. Hence, we will discuss ethical web scraping, its best practices, and the legal considerations in this article.

Understanding Ethical Web Scraping:

Web scraping is a technique for collecting data from websites. It offers real-time, detailed data that can be integrated directly into business models for lasting success. Nevertheless, web scraping is often considered unethical because of the legal and ethical concerns that data collection raises.

Planning and executing web scraping with these concerns in mind is known as ethical web scraping. It reduces the risks involved in extracting data from websites. Web scraping falls into three broad types: self-built scrapers, browser extensions, and cloud web scraping.

Ethical concerns such as copyright infringement and the recording of sensitive information can arise with any of these methods. Adopting ethical web scraping is crucial to addressing them.

How Web Scraping Works:

The process of web scraping starts with pinpointing the data that is needed. The person running the scraper decides whether to extract data from the entire website or only from specific areas, and the scraper is programmed accordingly. For example, if a business needs pricing data to build a pricing strategy, it will target only the sections of a page that contain prices.

After this identification step, the bot or tool automatically extracts the requested data and delivers it as an Excel sheet or in a similar format. Companies can then integrate the extracted data into their business models as needed.
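The two steps above (target a specific section, then export it in a tabular format) can be sketched as follows. This is a minimal illustration using only Python's standard library. The sample HTML, the `name` and `price` class names, and the helper names are assumptions made for the example, and a real scraper would fetch the page over HTTP instead of using an inline string.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical sample page; a real scraper would download this content.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Widget</span><span class="price">$9.99</span></li>
  <li class="product"><span class="name">Gadget</span><span class="price">$24.50</span></li>
</ul>
"""


class PriceParser(HTMLParser):
    """Collects (name, price) pairs from <span class="name"> and <span class="price">."""

    def __init__(self):
        super().__init__()
        self.rows = []        # finished (name, price) tuples
        self._field = None    # field held by the <span> currently open, if any
        self._current = {}    # fields gathered for the product in progress

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        # Text outside the targeted spans is ignored.
        if self._field:
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li":
            if "name" in self._current and "price" in self._current:
                self.rows.append((self._current["name"], self._current["price"]))
            self._current = {}


def extract_prices(html):
    """Return the (name, price) rows found in the given HTML."""
    parser = PriceParser()
    parser.feed(html)
    return parser.rows


def to_csv(rows):
    """Serialize rows as CSV text, a format spreadsheet tools can open."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["name", "price"])
    writer.writerows(rows)
    return buffer.getvalue()


rows = extract_prices(SAMPLE_HTML)
print(to_csv(rows))
```

Because the parser reacts only to the two targeted classes, the rest of the page is never stored, which matches the idea of scraping a specific area instead of the whole site.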

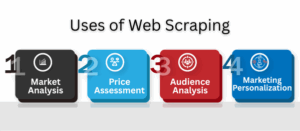

Uses of Web Scraping:

Businesses adopt web scraping for multiple purposes, including competitive analysis. The key areas are discussed below.

Market Analysis:

Web scraping offers in-depth and real-time data. Companies often integrate it to analyze their target industry. This approach offers a clearer picture of what their competitors are focusing on, alongside offering insights into key market trends and transformations.

Price Assessment:

Pricing products and services is a crucial, strategic decision for businesses. Web scraping helps firms extract and assess competitors' product data, which they can then use to plan their own pricing strategy. This also creates an opportunity for competitive pricing.

Audience Analysis:

Web scraping on social media platforms helps in understanding the audience’s perspective on a product or service. Moreover, it assists in discovering customer behavior, which can contribute to product enhancement. Many adopt this approach as a feedback strategy as well.

Marketing Personalization:

Personalization has been an emerging trend for businesses in recent years. Marketers adopt web scraping to extract data from various sources and personalize their campaigns. It also helps a business understand what its prospective customers actually need.

What happens if Web Scraping is not Done Ethically?

When scraping ignores ethics or the rules of a site, unwanted outcomes can take place. Here is a quick look:

Service Disruption and Reputation Damage: High-volume or poorly controlled crawlers can slow or crash sites, forcing operators to block entire IP ranges, sometimes cutting off legitimate traffic and partners. Recent disputes between publishers and large AI crawlers show how costly this can be in terms of trust.

Technical Blocks and Wasted Effort: Sites deploy rate limits, CAPTCHAs, IP blocks, or more sophisticated bot mitigation when they detect abuse. That means your pipeline can break unpredictably, and maintenance costs surge if web scraping is not done ethically.

Legal and Regulatory Exposure: Collecting or storing personal data, such as emails, phone numbers, and other identifiers, without consent can trigger GDPR, CCPA, or other privacy law issues. Misusing copyrighted content or violating a site’s explicit terms of service may also lead to legal notices or legal action.

In short, unethical scraping doesn’t just create disruptions; it creates real business risk.

Legal Considerations of Ethical Web Scraping:

Website scraping sits at the intersection of technology, copyright, contracts, and privacy. Key legal considerations include:

Public vs. Private Data:

Publicly visible pages are easier to access and more convenient to scrape, but being public does not exempt the data from privacy or copyright rules. Sensitive personal data should be treated as restricted unless you have clear lawful grounds to collect it.

Robots.txt and Technical Signals:

Robots.txt is a standard that communicates a site's crawler preferences. Though it is not legally binding everywhere, ignoring it can be used as evidence that scraping was intentional and reckless. Many organizations treat it as a primary consideration in the web scraping process.

Terms of Service (ToS) and Contract Law:

Websites often include ToS that restrict automated access. Violating ToS can lead to contract claims in some jurisdictions, though legal outcomes differ by case and country. Courts have disagreed about how far ToS restrictions can prevent scraping, so a legal risk assessment matters.

Data Protection Laws:

Privacy laws like GDPR (EU) or CCPA (California) can apply if scraped content contains personal data. This means you may need a lawful basis to collect it, rules for how long it is stored and when it is deleted, and transparency toward data subjects.
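One way to reduce this exposure is to screen scraped text for personal data before it is stored. The sketch below redacts email-like strings with a regular expression. The pattern is deliberately simple and is an assumption for illustration: it will miss some valid addresses, it does not cover phone numbers or other identifiers, and it is not a substitute for a privacy review.

```python
import re

# Simple illustrative pattern: catches common addresses, not every valid one.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text, placeholder="[redacted-email]"):
    """Return (clean_text, count) with email-like strings replaced."""
    return EMAIL_RE.subn(placeholder, text)


clean, found = redact_emails(
    "Contact jane.doe@example.com or sales@example.org for a quote."
)
print(clean)
print(found)
```

Returning the count alongside the cleaned text makes it easy to log how often personal data shows up in a source, which can inform whether that source should be scraped at all.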

Copyright and training AI:

Using scraped content to train models or republish copyrighted text can raise IP issues. The legal standards for training AI on scraped web data are evolving quickly, so businesses are advised to keep an eye on precedent and licensing options.

Additionally, draw up and work through a legal checklist before implementing and scaling web scraping. The checklist may include confirming the data type, checking robots.txt and ToS, and consulting privacy and IP counsel. Consulting counsel is especially important if you plan to keep and reuse personal or copyrighted content.

Best Practices of Ethical Web Scraping:

Ethical scraping is easier and more reliable when guided by a few practical guidelines. Below are operational practices recommended by practitioners and data-ethics professionals across industries:

1. Prefer Official APIs Whenever Possible-

If a site exposes an API that gives the data you need, use it. APIs come with the provider’s own rate limits and data contracts, so using them keeps you within the terms the provider has set and reduces legal risk.

2. Consider robots.txt and other Crawler Signals-

Treat robots.txt, sitemap.xml, and any explicit crawler instructions as primary guidelines. If a site blocks access to certain paths, don’t crawl them. This demonstrates good faith and reduces the chance of escalation.
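A minimal sketch of such a check, using Python's standard `urllib.robotparser`, is shown here. The robots.txt content and the bot name `ExampleResearchBot` are assumptions made for the example. A live crawler would instead point the parser at the site's real file with `set_url(...)` and `read()`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, parsed from a string to avoid network access.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

USER_AGENT = "ExampleResearchBot"  # assumed bot name

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(url):
    """Return True if robots.txt permits this user agent to fetch the URL."""
    return parser.can_fetch(USER_AGENT, url)


print(is_allowed("https://example.com/products/1"))
print(is_allowed("https://example.com/private/data"))
print(parser.crawl_delay(USER_AGENT))  # requested pause between requests, if any
```

Calling `is_allowed` before every request, and skipping any URL for which it returns `False`, keeps the disallowed paths out of the crawl entirely.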

3. Control Requests and Respect Rate Limits-

Use polite request rates, randomized delays, and exponential backoff on failure. Do not overload origin servers; your scraper's traffic should resemble light human browsing, not a denial-of-service attack.
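The sketch below shows one common way to combine exponential backoff with a randomized delay. The function names, the one-second base, the 60-second cap, and the choice to retry only on `OSError` are assumptions for illustration. The `fetch` argument stands in for whatever function actually performs the request.

```python
import random
import time


def backoff_delay(attempt, base=1.0, cap=60.0):
    """Seconds to wait before retrying after failed attempt `attempt` (0-based).

    The ceiling doubles with each attempt up to `cap`; the actual wait is
    drawn at random from the upper half of that range to spread out retries.
    """
    ceiling = min(cap, base * (2 ** attempt))
    return ceiling / 2 + random.uniform(0, ceiling / 2)


def fetch_with_retries(fetch, max_attempts=5, sleep=time.sleep):
    """Call fetch() until it succeeds or the attempts run out."""
    for attempt in range(max_attempts):
        try:
            return fetch()
        except OSError:
            if attempt == max_attempts - 1:
                raise  # give up and surface the last error
            sleep(backoff_delay(attempt))


# Show how the waiting time grows across successive failures.
for attempt in range(4):
    print(attempt, round(backoff_delay(attempt), 2))
```

Passing `sleep` as a parameter is a design choice that lets the retry logic be exercised in tests without actually waiting.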

4. Identify Yourself with a helpful User-Agent-

Set a clear User-Agent string that identifies your organization and includes a contact email or URL. If site owners notice issues with your scraping, they can reach out rather than immediately block you.
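As a minimal sketch, an identifying header can be attached with the standard library's `urllib.request.Request`. The bot name, URL, and email address below are placeholders assumed for the example. The request is only constructed here, not sent.

```python
from urllib.request import Request

# Assumed identity values; replace them with your organization's real details.
USER_AGENT = "ExampleResearchBot/1.0 (+https://example.com/bot; bot-admin@example.com)"


def build_request(url):
    """Create a request that carries an identifying User-Agent header."""
    return Request(url, headers={"User-Agent": USER_AGENT})


request = build_request("https://example.com/products")
# urllib stores header names in capitalized form, hence "User-agent" here.
print(request.get_header("User-agent"))
```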

5. Collect only what you need-

Store only the minimal dataset required for your needs and delete records when they’re no longer needed. This reduces privacy and compliance risk.
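A small sketch of both ideas, keeping only the fields you need and purging old records, might look like this. The field names, the 30-day retention window, and the helper names are assumptions chosen for the example, and the right values depend on your use case and legal advice.

```python
from datetime import datetime, timedelta, timezone

ALLOWED_FIELDS = {"product", "price", "currency"}  # assumed schema
RETENTION = timedelta(days=30)                     # assumed retention window


def minimize(raw, collected_at):
    """Keep only the allowed fields and stamp the record with its collection time."""
    kept = {key: value for key, value in raw.items() if key in ALLOWED_FIELDS}
    kept["collected_at"] = collected_at
    return kept


def purge_expired(records, now):
    """Return only the records collected within the retention window."""
    return [r for r in records if now - r["collected_at"] <= RETENTION]


now = datetime(2025, 6, 1, tzinfo=timezone.utc)
raw = {
    "product": "Widget",
    "price": "9.99",
    "currency": "USD",
    "reviewer_email": "someone@example.com",  # personal data we do not need
}
fresh = minimize(raw, now - timedelta(days=2))
stale = minimize(raw, now - timedelta(days=45))
print(purge_expired([fresh, stale], now))
```

Filtering with an allow-list instead of a block-list means that any new, unexpected field on a page is dropped by default.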

6. Build transparency and escalation channels-

Provide clear contact details, an opt-out method, and a way to negotiate access for heavy or commercial use. If a site operator contacts you, respond quickly and remediate if needed. Such simple steps prevent many disputes and help keep your scraping within ethical bounds.

Concluding Remarks:

Web scraping is a powerful strategy for real-time market intelligence, personalization, and research. It can be an effective technique as industries grow more competitive every day. However, adopting an ethical approach is necessary.

Ethical web scraping reduces technical disruptions, avoids legal exposure, and sustains cooperative relationships with the sites whose data you rely on. Follow the practical rules above: choose APIs where possible, control your request rate, collect only the data you need, and be transparent. Such an approach not only delivers valuable results but also keeps you clear of legal risks.
