We live in a world that runs on data. From the music recommendations on your phone to the navigation app that finds the quickest route home, artificial intelligence (AI) systems are working behind the scenes, learning from vast amounts of information. This data often includes details about us: our habits, our preferences, and our identities. It's only natural to ask: if these AI systems learn from our data, how do we know that our personal information is being protected?

What is Differential Privacy?

Suppose you are being surveyed about your habits or your health. You want to help, but you don't want anyone to know your specific responses. Differential privacy is a method for protecting your personal information while still allowing useful insights to be drawn from the survey as a whole.

It does this by introducing a small, carefully calibrated amount of randomness (known as "noise") into the data or into the results computed from it. Even someone who examines the output closely cannot reliably tell whether your data was included or not. The results as a whole remain meaningful, yet your privacy stays intact.

At its core, differential privacy in AI is a mathematical guarantee. It allows an AI model to learn broad patterns from a crowd without revealing anything specific about you. It is the distinction between knowing that "people between 30 and 40 in a town prefer a particular kind of coffee" and knowing that "John Smith, who resides at 123 Main Street and is 35, purchases a double latte each morning." The former is helpful to businesses; the latter is an invasion of John's privacy.
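For readers who want to see the guarantee written out, the standard definition of epsilon-differential privacy is sketched below. The notation is introduced here for illustration and does not come from the article: M is the randomized analysis, D and D' are any two datasets that differ in one person's record, and S is any set of possible outputs.

```latex
\[
\Pr\bigl[\,M(D) \in S\,\bigr] \;\le\; e^{\varepsilon} \cdot \Pr\bigl[\,M(D') \in S\,\bigr]
\]
```

In words: adding or removing one person changes the probability of any outcome by at most a factor of e^ε, so a small ε means the output looks almost the same with or without you.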

How Does It Work? The Magic of "Noise"

So how does this virtual protector actually work? The basic principle is to add a carefully calculated dose of "statistical noise," or randomness, to the data or to the results computed from it.

Consider a town taking a survey of personal income. If one very rich individual moved there, the town's average income would jump, potentially revealing that individual's presence and wealth. A differentially private system adds random noise to the published result, calibrated to how much any one person could change it, so the figure shifts up or down by a random quantity. The overall mean stays close to the true value for the group, but an observer can no longer tell whether a change in the result comes from an actual person or simply from the added noise. The group trend signal is intact, but the individual data points are obscured.
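The income example can be sketched with the Laplace mechanism, one common way of adding calibrated noise. This is a minimal illustration, not a production implementation: the function name `private_mean`, the income bounds, and the sample data are all assumptions made here, and the sketch treats the number of respondents as public.

```python
import numpy as np

def private_mean(values, lower, upper, epsilon, rng=None):
    """Estimate a mean with Laplace noise (assumes the count of values is public)."""
    rng = np.random.default_rng() if rng is None else rng
    values = np.asarray(values, dtype=float)
    # Clip every value into [lower, upper] so that changing one person's
    # record can move the mean by at most (upper - lower) / n.
    clipped = np.clip(values, lower, upper)
    sensitivity = (upper - lower) / len(clipped)
    # Laplace noise with scale sensitivity / epsilon: smaller epsilon, more noise.
    noise = rng.laplace(loc=0.0, scale=sensitivity / epsilon)
    return clipped.mean() + noise

# Hypothetical town: 999 ordinary incomes plus one very large one.
rng = np.random.default_rng(seed=0)
incomes = np.append(rng.normal(50_000, 10_000, size=999), 5_000_000)
print("raw mean:    ", round(float(incomes.mean())))
print("private mean:", round(float(private_mean(incomes, 0, 200_000, epsilon=1.0, rng=rng))))
```

Clipping is what keeps the noise small: without an upper bound on income, a single extreme value could move the average arbitrarily far, and no modest amount of noise could hide it.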

How Does Differential Privacy Protect You?

- It conceals your specific data, so nobody can tell with confidence whether you were in the dataset or not.

- It introduces random noise into the data or results, so precise individual details become fuzzy.

- It has a privacy parameter (epsilon) that sets how strongly privacy is guarded. The lower the number, the stronger the privacy and the more noise is added.

- It can be applied at various phases, such as when data is gathered, when AI is being trained, or when results are made public.
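To make the role of epsilon concrete at the data-gathering stage, here is a minimal sketch of randomized response, a classic technique for yes/no survey questions. The function names are hypothetical and the technique is chosen here for illustration; the article does not prescribe it. Each person answers truthfully with probability e^ε / (e^ε + 1) and flips the answer otherwise, so a lower epsilon means more flipped answers.

```python
import math
import random

def randomized_response(truth: bool, epsilon: float, rng: random.Random) -> bool:
    """Report the true answer with probability e^eps / (e^eps + 1), else the opposite."""
    p_truth = math.exp(epsilon) / (math.exp(epsilon) + 1.0)
    return truth if rng.random() < p_truth else not truth

def estimate_true_rate(reports, epsilon: float) -> float:
    """Undo the known flipping probability to estimate the real share of 'yes' answers."""
    p = math.exp(epsilon) / (math.exp(epsilon) + 1.0)
    observed = sum(reports) / len(reports)
    # observed = p * t + (1 - p) * (1 - t), solved for the true rate t.
    return (observed - (1.0 - p)) / (2.0 * p - 1.0)

# Simulated survey: 30% of 100,000 people would truthfully answer "yes".
rng = random.Random(42)
truths = [i < 30_000 for i in range(100_000)]
reports = [randomized_response(t, epsilon=1.0, rng=rng) for t in truths]
print("estimated yes-rate:", round(estimate_true_rate(reports, epsilon=1.0), 3))
```

No single report can be trusted, since any individual answer may have been flipped, yet the aggregate estimate lands close to the true rate when enough people respond.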

How is Differential Privacy in AI Used?

- Before Training: Noise is added to the data before it is used to train the AI.

- During Training: The AI learns from the data, but noise is added to each learning step (for example, to the model's updates), so it does not memorize personal information.

- After Training: Noise can also be added to the AI's final answers or predictions to keep them private.
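The "During Training" option is often implemented in the style of differentially private stochastic gradient descent (DP-SGD): limit how much any one example can influence an update, then add noise. The sketch below assumes that approach; the function `dp_gradient_step` and its parameter names are hypothetical, and it does not compute the resulting epsilon, which dedicated libraries handle with a privacy accountant.

```python
import numpy as np

def dp_gradient_step(weights, per_example_grads, clip_norm, noise_multiplier, lr, rng):
    """One noisy update: clip each example's gradient, sum, add Gaussian noise, average."""
    grads = np.asarray(per_example_grads, dtype=float)  # shape: (batch, dims)
    norms = np.linalg.norm(grads, axis=1, keepdims=True)
    # Scale down any gradient whose L2 norm exceeds clip_norm, bounding
    # the influence of a single training example on this step.
    scale = np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    clipped = grads * scale
    # Gaussian noise proportional to the clipping bound masks any one example.
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=grads.shape[1])
    noisy_mean = (clipped.sum(axis=0) + noise) / grads.shape[0]
    return np.asarray(weights, dtype=float) - lr * noisy_mean

rng = np.random.default_rng(0)
weights = np.zeros(2)
grads = [[3.0, 4.0], [0.6, 0.8]]  # the first gradient has norm 5 and will be clipped
print(dp_gradient_step(weights, grads, clip_norm=1.0, noise_multiplier=1.1, lr=0.1, rng=rng))
```

Setting `noise_multiplier` to 0 removes the noise and leaves only clipping, which is useful for checking the arithmetic but provides no privacy guarantee.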

Best Practices

- Set a Privacy Threshold: Decide up front how much privacy loss (epsilon) is acceptable for your use case.

- Use Reliable Tools: Organizations like Google and IBM provide tools that help in applying differential privacy in AI securely.

- Don't Query the Same Data Too Many Times: Every additional query on the same data reveals a little more, so repeated queries gradually erode privacy.

- Protect Sensitive Information More Strongly: Add more noise to information such as health or financial data.

- Find a Balance Between Privacy and Usefulness: Too much noise will render the data useless, so finding the right balance is essential.
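The first and third practices are often combined into a "privacy budget": under basic sequential composition, the epsilons of successive queries on the same data add up, so a system can refuse further queries once a chosen total is reached. The class below is a hypothetical, minimal sketch of that bookkeeping, not the interface of any particular tool.

```python
class PrivacyBudget:
    """Tracks cumulative epsilon under basic sequential composition (epsilons add)."""

    def __init__(self, total_epsilon: float):
        self.total_epsilon = total_epsilon
        self.spent = 0.0

    @property
    def remaining(self) -> float:
        return self.total_epsilon - self.spent

    def spend(self, epsilon: float) -> None:
        """Record a query costing `epsilon`, or refuse it if the budget would be exceeded."""
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        # Small tolerance so floating-point rounding does not reject an exact fit.
        if self.spent + epsilon > self.total_epsilon + 1e-12:
            raise RuntimeError("privacy budget exhausted; refusing further queries")
        self.spent += epsilon

budget = PrivacyBudget(total_epsilon=1.0)
budget.spend(0.4)  # first query
budget.spend(0.4)  # second query on the same data
print("remaining epsilon:", round(budget.remaining, 2))
```

A third query costing 0.4 would raise an error here, which is the point: the threshold is enforced rather than merely documented.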

Why Is Differential Privacy in AI Useful?

- It safeguards people without preventing useful data analysis.

- It makes it hard for attackers to determine who is in the dataset.

- It is well suited to machine learning systems that handle sensitive data, such as healthcare, finance, or user behavior.

An Example from Real Life: The US Census

This method is not only a theoretical concept; some of the largest organizations in the world are implementing it to establish trust. A well-known real-life example is the US Census Bureau. During the 2020 Census, it applied differential privacy to protect the confidentiality of every individual residing in the United States.

The census gathers extremely private information, and the bureau has a mandate to keep it safe. With this method, it was able to publish useful statistical information about the population, essential for distributing federal funds as well as redrawing congressional districts, without endangering anyone's personal data. This is a clear instance of differential privacy in data analysis serving the public good while protecting individual rights.

Why Differential Privacy Is Important for the Future of AI

The role of differential privacy in AI is crucially important. AI models are trained on enormous datasets. Without safeguards, a model can occasionally "memorize" specific pieces of its training data, which can later be inadvertently exposed.

Differential privacy in AI serves as a protector during this training procedure. It makes sure that the AI model learns the overall, useful trends, such as winter leading to greater sales of cold medicine, without memorizing that you, individually, purchased one specific brand on a particular date. This is the basis for building responsible AI that innovates without infringing on our core right to privacy.

The demand for it has never been more urgent. As a recent IBM report states, the global average cost of a data breach rose to unprecedented levels. Consumers are increasingly cautious about how their data is used. Cisco's research found that the vast majority of consumers are concerned about data privacy and want control over it. Differential privacy in AI provides a practical answer to this growing concern.

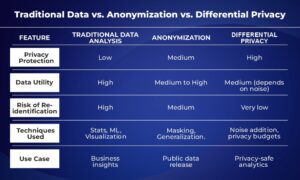

Traditional Data vs. Anonymization vs. Differential Privacy

The following table gives an overview of the differences between traditional data analysis, anonymization, and analysis with differential privacy.

Wrapping Up: Creating a Smarter, Safer Future Together

Differential privacy in AI is much more than tech-speak; it is an expression of ethical innovation. It is a deep transformation in the way we manage the world's information. It shows that we can continue to improve technology and get amazing insights from AI without sacrificing privacy. It is the silent protector that helps ensure no one is left vulnerable as we go about building a brighter, more intelligent future together. As AI becomes part of our everyday lives, using tools that protect our privacy will help make the future smarter, safer, and fairer for everyone.


FAQs

Q1. What is the function of differential privacy in AI security?

Answer: Differential privacy helps AI systems protect personal information by adding calibrated noise to their outputs, so that nobody can be sure whether your data was used or not.

Q2. How can AI protect data privacy?

Answer: AI can protect privacy by using techniques like encryption, anonymization, and differential privacy to make sure sensitive information is not leaked or misused.

Q3. How does differential privacy protect individual privacy?

Answer: Differential privacy hides individual data by mixing in random noise, so even someone who looks closely at the results cannot figure out whose data they reflect.
