Artificial intelligence is no longer a futuristic concept. It has become part of our daily lives. Along the way, it has begun reshaping industries, making tasks easier, and even making decisions on our behalf. It might be a simple recommendation, a piece of advice, a solution to a problem, an intricate algorithm, or a health suggestion: AI is behind all of them. This rapid development has brought numerous opportunities as well as threats, which is why taming AI has become a priority.

Taming AI means making sure that artificial intelligence systems are built to be safe, ethical, and useful for humans, and that they stay aligned with human values. Let us explore the idea of taming AI in detail in this blog.

What is Taming AI?

Imagine AI as an extremely intelligent assistant. It can accomplish tremendous things, but it requires guidance. Taming AI means ensuring it develops in a way that is safe, equitable, and beneficial for humans. It is not about restraining AI but about teaching it to follow rules, respect human values, and do no harm.

Just as we set limits for children or animals, we have to set limits for AI so that it works with us, not against us. Taming AI involves:

- Interpreting AI Behavior: Scientists analyze how AI systems make decisions in order to decrease bias and increase transparency.

- Establishing Rules and Regulations: Organizations and governments enact legislation (such as the EU's AI Act) to regulate risks and prevent AI from causing harm to humans or infringing on rights.

- Enhancing Safety and Trust: Researchers focus on technical measures and public participation to create more dependable and accountable AI systems.

Why Do We Need to Tame AI?

AI is used in nearly every industry, including healthcare, education, and transport. However, if it is not handled carefully, it can lead to problems such as biased decisions, privacy violations, or even loss of control over critical systems. Taming AI means defining principles and ensuring that AI adheres to human values.

For instance, a self-driving car or an AI recruitment tool must be fair and secure. That is why safe AI development is not just a nice-to-have. It has become essential.

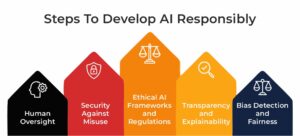

How Can We Develop AI Responsibly?

Ethical AI Frameworks and Regulations

Governments, scientists, and technology firms need to work together to create clear ethical guidelines. Just as we have traffic regulations to avoid accidents, AI has to be governed in order to avoid abuse. Initiatives such as the EU's AI Act and worldwide efforts on responsible AI are a positive step.

Transparency and Explainability

AI does not have to be a black box. If an AI denies a loan application or recommends a medical treatment, we need to know why. Transparent AI models help establish trust and enable humans to intervene when necessary.
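To make the loan example concrete, here is a minimal sketch of what an explainable decision could look like. It assumes a simple linear scoring model; the feature names, weights, and threshold are all made up for illustration and do not come from any real lender or library.

```python
# Hypothetical weights for an illustrative linear scoring model (assumed values).
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "years_employed": 0.15}
BASE_SCORE = -1.0   # assumed starting score before any feature is considered
THRESHOLD = 0.0     # assumed cut-off: scores at or above this are approved


def explain_decision(applicant):
    """Return (approved, contributions) with the most influential feature first."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BASE_SCORE + sum(contributions.values())
    # Rank features by how strongly they pushed the score up or down.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score >= THRESHOLD, ranked


approved, reasons = explain_decision(
    {"income_k": 30, "debt_ratio": 0.6, "years_employed": 1}
)
print("approved:", approved)
for feature, impact in reasons:
    print(f"{feature}: {impact:+.2f}")
```

Because each feature's contribution is visible, a reviewer can see which factor drove the outcome and question it if it looks wrong. Real models are usually more complex and need dedicated explanation techniques, but the goal is the same.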

Human Oversight

However intelligent AI is, human judgment must always be involved. Whether doctors use AI tools, judges consult them in legal matters, or engineers monitor machines, human judgment keeps things safe and fair.
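One common way to keep a human in the loop is to let the system act on its own only when it is confident, and to send everything else to a person. The sketch below assumes the model reports a confidence between 0 and 1; the function name `route_prediction` and the 0.9 threshold are hypothetical choices for illustration.

```python
def route_prediction(label, confidence, min_confidence=0.9):
    """Auto-apply confident predictions; send the rest to a human reviewer."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= min_confidence:
        return f"auto:{label}"
    # Below the threshold, a person makes the final call.
    return f"human_review:{label}"


print(route_prediction("benign", 0.97))
print(route_prediction("malignant", 0.62))
```

In high-stakes settings, a team might choose to send every case to a reviewer regardless of confidence. The threshold is a policy decision, not a technical one.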

Bias Detection and Fairness

AI learns from data, and if that data is biased, the AI will be biased as well. To keep outcomes fair for everyone, regardless of gender, race, or socioeconomic status, we must continue to monitor and correct bias in AI systems.
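Monitoring for bias can start with something very simple: comparing how often each group receives a positive outcome. The sketch below computes that gap, often called the demographic parity difference. The group labels and decisions are made-up sample data, and the helper names are hypothetical.

```python
from collections import defaultdict


def selection_rates(records):
    """Map each group to its share of positive outcomes.

    records is an iterable of (group, selected) pairs.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Made-up hiring decisions for two illustrative groups.
decisions = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
gap = demographic_parity_gap(decisions)
print(selection_rates(decisions), gap)
```

A large gap does not prove discrimination on its own, but it is a signal that the system and its training data deserve a closer look.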

Security Against Misuse

AI can be exploited to generate fake videos (deepfake scams), to launch AI-powered cyberattacks, or even to serve as a weapon. To prevent this, we require stringent safety regulations and safeguards. These days, around 7 out of 10 images you see on social media are made by AI.

That’s a huge shift, and it is getting harder to tell what’s real. At the same time, deepfake scams have gone up to 6.5% globally, which is pretty worrying. It’s a clear sign that we need better tools and strong rules to keep AI use safe, honest, and trustworthy.

The Role of Businesses and Developers

Technology firms and AI designers have a significant role to play in building AI safely. By prioritizing ethics over speed, using fair data, and opening up to external audits, they can build AI that is oriented toward human values.

Technology companies and universities can help AI develop in a smart and safe manner by collaborating on open-source projects. Taming AI is not about slowing progress. It is about ensuring that AI progresses in the right direction, with responsibility and caution.

What Can Individuals Do?

You don't need to be an AI whiz to point AI in the right direction. Being aware of how AI is affecting your life, asking questions when something doesn't feel fair, and speaking up for responsible tech policies can make a difference. As consumers, we should insist on disclosure of how businesses are using AI and protecting our personal data.

The Future of AI: A Balanced Approach

Taming AI depends on making sure that it develops in line with safety, fairness, and human values. If we combine smart innovation with responsibility, we can build a future where AI serves us all without putting our safety or fairness at risk.

AI is an extremely powerful tool. If we handle it carefully, it can be of immense help. Taming AI does not mean slowing down. It means making sure progress does not come at the expense of safety or ethics. If we make smart decisions today, AI can help all of us without harming anyone.

To discover more about popular topics, drop by SecureITWorld!

FAQs

Q1. Why is human oversight necessary in AI?

Answer: Humans provide judgment, ethics, and accountability. AI lacks emotions and moral reasoning, making human supervision crucial for safe decisions.

Q2. Why is a "step toward safe AI development" so critical now?

Answer: The fast pace of AI development means that its influence on society is increasing rapidly. Without an early focus on safety and ethics, we are in danger of developing systems that are biased, insecure, or have unexpected adverse effects on our lives and our environment.

Q3. Who should ensure that AI is being developed safely?

Answer: Responsibility is shared by everyone. That includes the developers and researchers who design AI systems, the firms that deploy them, the policymakers who enact regulations, and the public, who need to stay informed and insist on responsible practices.
